The Quantum

Quantum Physics

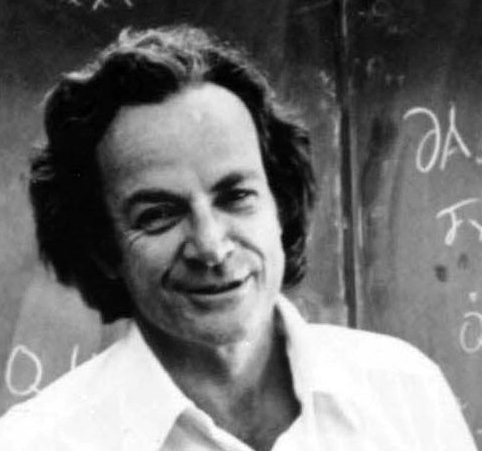

Richard Phillips Feynman was an American theoretical physicist known for his work on the path integral formulation of quantum mechanics, the theory of quantum electrodynamics, and the physics of the superfluidity of supercooled liquid helium, as well as for his work in particle physics. He shared the Nobel Prize in Physics in 1965 with Julian Schwinger and Shin'ichirō Tomonaga. He developed a widely used pictorial representation scheme for the mathematical expressions governing the behavior of subatomic particles, which later became known as Feynman diagrams.

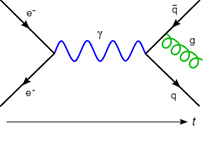

In quantum physics, Feynman diagrams are used as a pictorial representation of the mathematical expressions describing the behavior of subatomic particles. The scheme is named after Feynman. Interactions between subatomic particles, with their chains of cause and effect, can be complex and difficult to understand intuitively, but Feynman diagrams represent them clearly, giving a simple visualization of what would otherwise be an abstract formula.

In this Feynman diagram, an electron (e⁻) and a positron (e⁺) annihilate and produce a photon, which is represented by the blue sine wave. The photon then becomes a quark–antiquark pair, after which the antiquark radiates a gluon, which is represented by the green helix.

Notice the direction of time, marked by t. This clearly defines a cause and an effect, whereas most other physical phenomena represented by purely algebraic or differential equations are completely reversible. Those equations do not seem to consider cause and effect, and they often leave out time and its direction as well.

Causality is believed to include an explanation of past events, predictions of future events, and a process that defines the direction going from cause to effect. Physicists call this information. In relativity, information is the signal that enforces causality. For example, if event A causes event B, then some signal must travel from A to B. This information cannot be lost. It may be jumbled beyond recognition, but it is never lost.

Nuclear Decay

This is also known as radioactive decay or simply radioactivity. It is the process by which the nucleus of an atom loses energy by emitting radiation, such as alpha particles (two protons and two neutrons), beta particles (fast-moving electrons or positrons), gamma rays (very high-energy light) or, more rarely, neutrons.

Radioactive decay is a random process at the level of single atoms, meaning that it is impossible to predict when a specific atom will decay. Given a large collection of atoms, however, the decay rate can be calculated from their measured decay constants or half-lives. Nuclear decay demonstrates a direction in time. It occurs in unstable atomic nuclei: those that do not have enough binding energy to hold the nucleus together because of an excess of either protons or neutrons.
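As a minimal sketch of that calculation, the Python below uses the standard exponential decay law, N(t) = N0 · exp(−λt), with decay constant λ = ln 2 / half-life. The function names and the sample numbers (one million atoms, a 10-second half-life) are assumptions chosen for illustration and do not describe any particular isotope.

```python
import math


def decay_constant(half_life):
    """Decay constant (per unit time) for a given half-life."""
    return math.log(2) / half_life


def remaining_atoms(n0, half_life, t):
    """Expected number of undecayed atoms after time t."""
    return n0 * math.exp(-decay_constant(half_life) * t)


def decay_rate(n0, half_life, t):
    """Expected number of decays per unit time at time t."""
    return decay_constant(half_life) * remaining_atoms(n0, half_life, t)


if __name__ == "__main__":
    # Hypothetical sample: 1,000,000 atoms with a 10-second half-life.
    for t in (0, 10, 20, 30):
        print(t, round(remaining_atoms(1_000_000, 10.0, t)))
```

These are expected values for a large sample. For any single atom the moment of decay remains unpredictable, as described above.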

Entanglement

Back in 1935, Einstein and physicists Boris Podolsky and Nathan Rosen described a thought experiment involving two entangled particles. Entanglement means that the particles' physical properties are linked across distances ("spooky action at a distance," as Einstein called it), so that what you do to one particle, such as measuring its spin, would impact the other. Intuitively, you'd think that this is not possible given Einstein's relativistic (modified Newtonian classical) laws of physics at large scales. What these physicists described is now called the Einstein-Podolsky-Rosen (EPR) paradox, and later experiments using individual particles confirmed that the linked behavior is real.

A pair of quantum systems may be described by a single wave function, which encodes the probabilities of the outcomes of experiments that may be performed on the two systems, whether jointly or individually. At the time the EPR paper was written, it was known from experiments that the outcome of an experiment sometimes cannot be uniquely predicted. An example of such indeterminacy can be seen when a beam of light is split using a half-silvered mirror. One half of the beam will reflect, and the other will pass. If the intensity of the beam is reduced until only one photon is in transit at any time, quantum mechanics cannot predict whether that photon will reflect or transmit. Also, if you perform a test on an entangled particle coming out of the splitter, this will affect its partner.

The routine explanation of this effect was, at that time, provided by Heisenberg's uncertainty principle. Physical quantities come in pairs called conjugate quantities. Examples of such conjugate pairs are (position, momentum), (time, energy), and (angular position, angular momentum). When one quantity was measured, and became determined, the conjugated quantity became indeterminate. Heisenberg explained this uncertainty as due to the quantization of the disturbance from measurement.
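For the position and momentum pair, this trade-off is usually written as the inequality below. The symbols are not used in the text above and are introduced here as assumptions: Δx and Δp stand for the spreads (standard deviations) of position and momentum, and ħ is the reduced Planck constant.

```latex
\Delta x \, \Delta p \;\ge\; \frac{\hbar}{2}
```

The smaller the spread in one quantity, the larger the spread in its conjugate partner must be.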

Heisenberg's principle was an attempt to provide a classical explanation of a quantum effect sometimes called non-locality. According to EPR there were two possible explanations. Either there was some interaction between the particles (even though they were separated) or the information about the outcome of all possible measurements was already present in both particles.

A new experiment, published April 26 in the journal Science, shows that the effect still occurs using even a clump of nearly 600 supercooled particles.

It's not surprising, exactly, that a paradox originally framed in terms of two particles also occurs for clumps of hundreds of particles. The same physics at work in a very small system should also work in much larger systems. But scientists perform these ever-more-complex tests because they help confirm old theories and narrow down the ways in which those theories might be wrong. And they also demonstrate the capability of modern technology to put into action ideas that Einstein and his colleagues could think about only in abstract terms.

To pull off this experiment, the researchers cooled about 590 rubidium atoms (give or take 30 atoms) to near absolute zero.

At that temperature, the atoms formed a state of matter called a Bose-Einstein condensate, which, as Live Science has previously reported, is a state of matter in which a large group of atoms become so entangled that they start to blur and overlap with one another; they begin to behave more like one large particle than lots of separate ones. Quantum physicists love to experiment with Bose-Einstein condensates because this kind of matter tends to demonstrate the weird physics of the quantum world at a large enough scale for the scientists to observe it directly.

In this experiment, they used high-resolution imaging to measure the spins of different chunks within the soup of rubidium atoms. The atoms in the condensate were so entangled that the physicists were able to predict the behavior of the second chunk by studying only the first. Both chunks of atoms, they showed, were so entangled that the behavior of the second chunk was in fact more knowable when only the first was observed, and vice versa.

The EPR paradox had come to life, on a relatively massive scale for the quantum world.

Live Science link to EPR paradox article

Biology

Biology is full of cause and effect. It seems this is its major focus. The evolutionary biologist’s main focus appears to be asking ‘how come this happened?’ The functional biologist is always trying to answer ‘how do things work?’ The rate of evolution is driven by the rate at which the species reproduces, the rate of change of the species’ environment, and the effects of that change on the species.

Some of these ways of explaining cause and effect seem timeless. The explanations of function rarely, if ever, mention the time it takes for the cause to produce the effect. It’s almost ignored.

So... what determines the speed of causality?

With Newtonian physics, the speed or time of the causation can be calculated from the forces involved. Since force is the product of mass and acceleration, we can calculate the time for the effect to occur.

In classical physics, force is the product of mass and acceleration, $f = m a$. Newton's law for the force caused by gravity is $f_g = G \frac{M m}{r^2}$. Replacing $f$ with $f_g$, we get $a_g = G \frac{M}{r^2}$, where $G$ is the gravitational constant, $M$ would be the mass of the planet you are on, and $r$ is the distance from the planet's center. By calculating $a_g$ we could then find the time it would take for an object to fall a given distance. Our effect here is the object falling a certain distance, with our cause being the force of gravity acting on the object.
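The calculation described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a general solver: the object starts at rest, air resistance is ignored, and the acceleration is treated as constant over the fall, so the time follows from d = ½ · a · t². The function names and the rounded Earth values are assumptions added for the example.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2


def surface_gravity(planet_mass_kg, radius_m):
    """Gravitational acceleration a_g = G * M / r^2 at distance r from the center."""
    return G * planet_mass_kg / radius_m ** 2


def fall_time(distance_m, acceleration):
    """Time to fall a given distance from rest: d = 1/2 * a * t^2, so t = sqrt(2d / a)."""
    return math.sqrt(2 * distance_m / acceleration)


# Rounded values for Earth, used here only as an example.
EARTH_MASS_KG = 5.972e24
EARTH_RADIUS_M = 6.371e6

if __name__ == "__main__":
    a_g = surface_gravity(EARTH_MASS_KG, EARTH_RADIUS_M)
    print("a_g in m/s^2:", a_g)
    print("seconds to fall 10 m:", fall_time(10.0, a_g))
```

With these inputs the acceleration comes out at roughly 9.8 m/s², and a 10-meter drop takes a little over 1.4 seconds. The cause (the gravitational force) fixes how long the effect (the fall) takes.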

If we look at the simple classical formula $f = m a$ above, we could say that, from a cause-and-effect perspective, it is written out of sequence. Perhaps we should write $a = f / m$, since the acceleration seems to be the effect, while the cause is the product of the force and the inverse of the mass. Acceleration is an emergent concept arising from the force acting on a mass. We would need to explore this concept further later. This is not the root of causality; it is more of an emergent effect. Causality goes much deeper than that.

As was mentioned earlier, Einstein said that the restriction on causality comes from the statement $c t > D$, where $c$ is the speed of light, $t$ is the time for the effect, and $D$ is the distance to the affected object. It makes more sense to write it this way: $t > D / c$. This clearly shows that the time it takes for
